Building record.a.video part 2: The screenCapture API

April 6, 2021 - Doug Sillars in Video upload, JavaScript

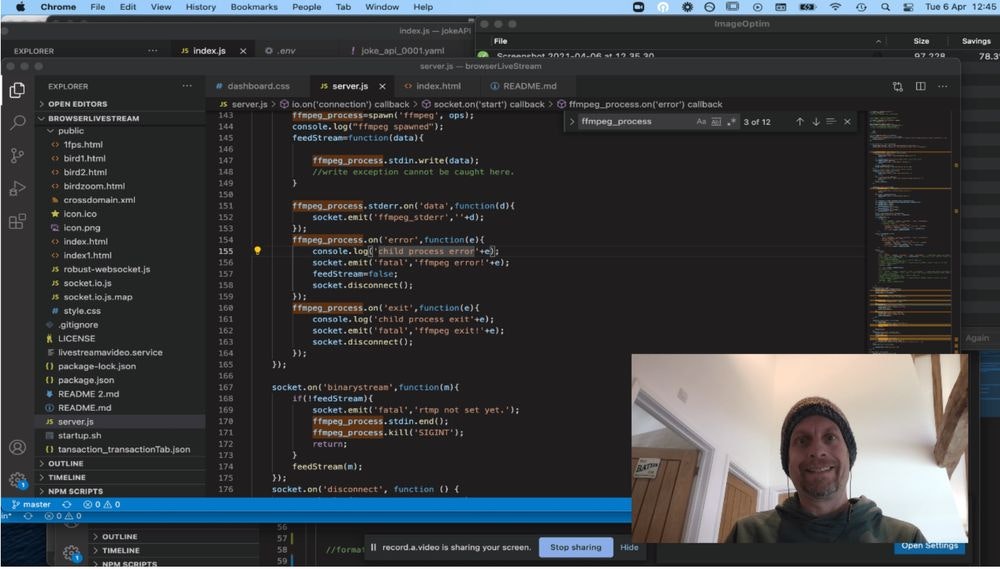

We’ve just released record.a.video, a web application that lets you record and share videos. If that were not enough, you can also livestream. The page works in Chrome, Edge, Firefox, Safari (14 and up), and on Android devices. This means that the application will work for about 75% of people using the web today. That’s not great, but since there are several new(ish) APIs in the application, it also isn’t that bad!

This is part 2 of a continuing series on the interesting web APIs I used to build the application.

In post 1, I talked about the getUserMedia API to record the user's camera and microphone.

In this post (post 2), I discuss recording the screen using the Screen Capture API.

Using the video streams created in posts 1 & 2, I draw the combined video on a canvas (also covered in this post).

In post 3, I'll discuss the MediaRecorder API, which I use to turn the canvas into the output video stream. This output stream feeds into the video upload (for video-on-demand playback) or the live stream (for immediate, live playback).

In post 4, I'll discuss the Web Speech API. This API converts audio into text in near real time, allowing me to create 'instant' captions for any video recorded in the app. It is an experimental API that only works in Chrome, but it was so neat that I included it in record.a.video anyway.

You can also review the api.video API reference documentation on live streams.

Sharing the screen

navigator.mediaDevices.getDisplayMedia() is the method I use to grab the screen.

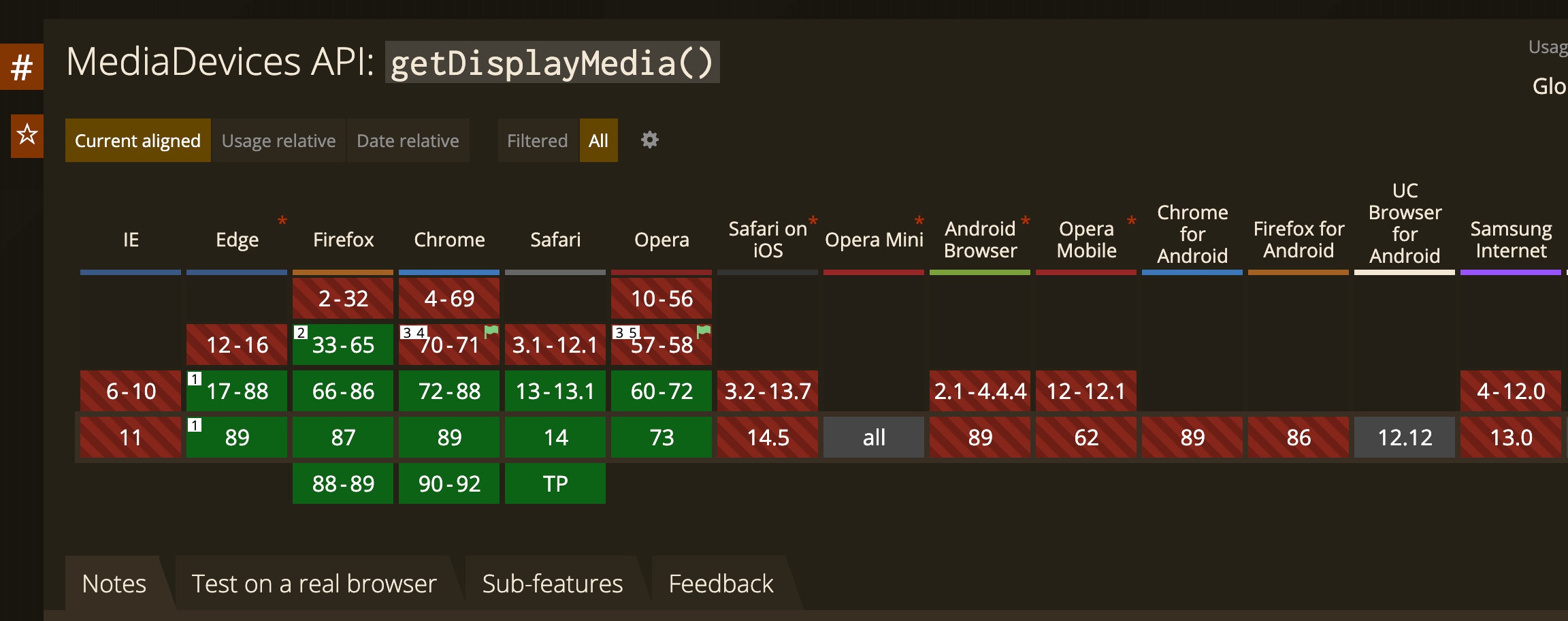

When we look at the support for getDisplayMedia on caniuse:

There is good support on desktop browsers, but no support on mobile. This makes sense: on mobile, the only thing you'd be able to share is the webpage that is already open. Since I'd like the app to work on mobile too, I check for support, block screen sharing where it is unavailable, and only allow the camera to be shared:

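```javascript
// cameraOnly is an app-level flag used when choosing the video sources.
// navigator.mediaDevices is undefined outside secure (HTTPS) contexts,
// so check that it exists before looking for getDisplayMedia.
if (navigator.mediaDevices && "getDisplayMedia" in navigator.mediaDevices) {
  console.log("screen capture supported");
} else {
  console.log("screen capture NOT supported");
  cameraOnly = true;
}
```

I want the screen display to appear in the videoElem video tag: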

```javascript
// This runs inside an async function (it uses await).
// videoElem, displayMediaOptions, screenShared and rearCameraMediaOptions
// are app-level variables used elsewhere in record.a.video.
videoElem = document.getElementById("display");

// the only constraint set here is muting the audio:
// this prevents awful feedback
displayMediaOptions = {
  video: {},
  audio: false
};

if (!cameraOnly) {
  // share the screen - it has not been shared yet!
  videoElem.srcObject = await navigator.mediaDevices.getDisplayMedia(displayMediaOptions);
  screenShared = true;
} else {
  // the screen cannot be shared, so grab a camera instead
  videoElem.srcObject = await navigator.mediaDevices.getUserMedia(rearCameraMediaOptions);
  screenShared = true;
}
```

When you call getDisplayMedia, you can add constraints to the capture. In my case, I am grabbing the audio from a different stream, so I don't need the audio from the screen. The code comment mentions awful feedback: even without a microphone, you can get it. For example, if the user is listening to music, getDisplayMedia captures that audio and plays it back (at a very high volume), potentially deafening your users.

We ran into this issue with our livestream app on the api.video dashboard: I was temporarily deafened when I tested the app while listening to music.

Please do not do this to your customers!
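The constraints object follows the same shape as the one passed to getUserMedia, but the Screen Capture specification is stricter: video constraints may use `ideal` and `max`, while `video: false`, `advanced`, or any `min`/`exact` value makes getDisplayMedia reject with a TypeError. The sketch below builds screen-capture options with audio disabled, as record.a.video does, plus an optional frame-rate cap. The helper names `buildDisplayMediaOptions` and `hasForbiddenConstraint` and the 30 fps default are hypothetical, chosen for illustration only.

```javascript
// Hypothetical helper: options for navigator.mediaDevices.getDisplayMedia().
// audio: false means no audio track is requested, so system audio
// (such as music the user is playing) is never captured and played back.
function buildDisplayMediaOptions({ maxFrameRate = 30 } = {}) {
  return {
    video: { frameRate: { max: maxFrameRate } }, // `max` is allowed for screen capture
    audio: false
  };
}

// Hypothetical helper: spot video constraints that the Screen Capture spec
// rejects with a TypeError (video: false, `advanced`, or `min`/`exact` values).
function hasForbiddenConstraint(options) {
  const video = options.video;
  if (video === false) return true;
  if (typeof video !== "object" || video === null) return false;
  if ("advanced" in video) return true;
  return Object.values(video).some(
    (value) => typeof value === "object" && value !== null &&
      ("min" in value || "exact" in value)
  );
}

const options = buildDisplayMediaOptions();
console.log(JSON.stringify(options));
console.log(hasForbiddenConstraint(options)); // false
console.log(hasForbiddenConstraint({ video: { width: { min: 1280 } } })); // true
```

Checking constraints up front like this turns a rejected promise at capture time into an error you can catch while developing.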

When the page asks to share your screen, the browser shows a popup asking which window or screen you'd like to share: you can share your entire screen, or just one application.
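If the user closes that popup without picking anything, or denies permission, getDisplayMedia rejects with a DOMException named NotAllowedError. Below is a minimal sketch of falling back to the camera in that case. The function name `getScreenOrCamera` is hypothetical, and `mediaDevices` is passed in as a parameter (an assumption, so the logic can be exercised with a stand-in object outside the browser); this is not record.a.video's actual code.

```javascript
// Hypothetical helper: try to capture the screen; if the user cancels the
// picker (NotAllowedError) or getDisplayMedia is unavailable, use the camera.
// In the browser, `mediaDevices` would be navigator.mediaDevices.
async function getScreenOrCamera(mediaDevices, displayOptions, cameraOptions) {
  if (typeof mediaDevices.getDisplayMedia === "function") {
    try {
      const stream = await mediaDevices.getDisplayMedia(displayOptions);
      return { stream, source: "screen" };
    } catch (err) {
      // Anything other than a refusal (e.g. a TypeError from invalid
      // constraints) is a real bug, so rethrow it instead of hiding it.
      if (err.name !== "NotAllowedError") throw err;
    }
  }
  const stream = await mediaDevices.getUserMedia(cameraOptions);
  return { stream, source: "camera" };
}

// Browser usage (inside an async function):
// const { stream, source } = await getScreenOrCamera(
//   navigator.mediaDevices, { video: {}, audio: false }, { video: true });
// videoElem.srcObject = stream;
```

Returning the `source` alongside the stream lets the rest of the app (for example, the layout code) know whether it actually got the screen.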

Putting the 2 video feeds together

Now that I have video feeds from both the camera and the screen, the 'play' event listener on videoElem fires:

```javascript
videoElem.addEventListener('play', function () {
  console.log('video playing');
  // draw the 2 streams to the canvas
  // (cameraElem is the video element showing the camera feed;
  // ctx is the canvas's 2D drawing context)
  drawCanvas(videoElem, cameraElem, ctx);
}, false);
```

drawCanvas is a function that combines the videos and draws them to the canvas. (It also draws the captions, which I will cover in a future post.)
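One thing to watch with this pattern: the 'play' event fires every time playback starts or resumes, not only the first time, so pausing and resuming the video would start a second drawCanvas loop running alongside the first. Below is a minimal sketch of a guard that starts a single loop and can also stop it. `createDrawLoop` and its injectable `schedule`/`cancel` parameters are hypothetical names; the 20 ms default mirrors the setTimeout delay used in drawCanvas.

```javascript
// Hypothetical helper: wraps a per-frame draw function in a setTimeout loop
// that starts at most once and can be stopped.
function createDrawLoop(drawFrame, intervalMs = 20,
                        schedule = setTimeout, cancel = clearTimeout) {
  let timerId = null;
  let running = false;

  function tick() {
    if (!running) return;
    drawFrame();
    timerId = schedule(tick, intervalMs); // queue the next frame
  }

  return {
    start() {
      if (running) return; // ignore repeated 'play' events
      running = true;
      tick();
    },
    stop() {
      running = false;
      if (timerId !== null) cancel(timerId);
      timerId = null;
    },
    isRunning: () => running
  };
}

// Browser usage, assuming a drawFrame() that holds drawCanvas's drawing
// code without its own setTimeout call:
// const loop = createDrawLoop(() => drawFrame(videoElem, cameraElem, ctx));
// videoElem.addEventListener('play', () => loop.start());
```

Injecting the scheduler also makes it easy to swap setTimeout for requestAnimationFrame-based scheduling later without touching the drawing code.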

First, we initialize the positions of the camera and the screen. I'm only showing the default layout (camera at bottom right), but these values change based on the layout the user requests:

```javascript
// set x/y coordinates for the screen and cameras
// default layout: big screen, camera at bottom right
// (X1/Y1 are passed to drawImage as a width and height, not an end point)
screenX0 = 0;
screenY0 = 0;
screenX1 = ctx.canvas.width;
screenY1 = ctx.canvas.height;

cameraX0 = 0.625 * ctx.canvas.width;
cameraY0 = 0.625 * ctx.canvas.height;
cameraX1 = ctx.canvas.width / 3;
cameraY1 = ctx.canvas.height / 3;
```
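Because the last two arguments of the canvas drawImage(image, dx, dy, dWidth, dHeight) call are a size, the camera is a third of the canvas in each direction, so its right and bottom edges land at about 96% of the canvas (0.625 + 1/3). Below is a minimal sketch of keeping each layout as an {x, y, width, height} rectangle instead of separate globals. The function name `cameraLayout` and the "topLeft" layout (which mirrors the bottom-right margin) are hypothetical; only the bottom-right numbers come from the code above.

```javascript
// Hypothetical helper: returns drawImage rectangles for the screen and camera.
// "bottomRight" reproduces record.a.video's default values;
// "topLeft" is an illustrative extra layout.
function cameraLayout(layout, canvasWidth, canvasHeight) {
  // the screen always fills the whole canvas
  const screen = { x: 0, y: 0, width: canvasWidth, height: canvasHeight };
  // the camera takes a third of the canvas in each direction
  const width = canvasWidth / 3;
  const height = canvasHeight / 3;

  const bottomRight = { x: 0.625 * canvasWidth, y: 0.625 * canvasHeight };
  // mirror the bottom-right margin so the camera sits the same
  // distance from the top-left corner
  const topLeft = {
    x: canvasWidth - (bottomRight.x + width),
    y: canvasHeight - (bottomRight.y + height)
  };

  const positions = { bottomRight, topLeft };
  const pos = positions[layout];
  if (!pos) throw new Error(`unknown layout: ${layout}`);
  return { screen, camera: { x: pos.x, y: pos.y, width, height } };
}

const { camera } = cameraLayout("bottomRight", 1280, 720);
console.log(camera); // { x: 800, y: 450, width: 426.6666666666667, height: 240 }
// In drawCanvas: context.drawImage(cameraIn, camera.x, camera.y, camera.width, camera.height);
```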

Each time drawCanvas is called, it draws the current frames of the screen and the camera at their positions on the canvas, then schedules itself to run again.

```javascript
// once the cameras and views are picked, this draws them on the canvas
// the timeout is 20 ms, so the canvas updates at ~50 FPS
function drawCanvas(screenIn, cameraIn, context) {
  const textLength = 60;

  // drawImage(source, x, y, width, height)
  context.drawImage(screenIn, screenX0, screenY0, screenX1, screenY1);
  context.drawImage(cameraIn, cameraX0, cameraY0, cameraX1, cameraY1);

  // write the transcript on the screen; transcripts of textLength
  // characters or more are replaced by a placeholder
  // (interim_transcript, captionX and captionY come from the captioning code)
  if (interim_transcript.length < textLength) {
    context.fillText(interim_transcript, captionX, captionY);
  } else {
    context.fillText("no captions", captionX, captionY);
  }

  // draw the next frame in 20 ms
  setTimeout(drawCanvas, 20, screenIn, cameraIn, context);
}
```

Try this in action

You can see this in action at record.a.video, though screen sharing only works in a desktop browser (see above).

Conclusion

getDisplayMedia is an easy-to-use API that lets you share your screen from the browser. Once you have access to the screen's video feed, you can stream it or save it using the MediaRecorder API (the subject of the next post in this series). To start building your own, you can create an account on api.video or ask questions on our community forum.
